If you really want to start writing queries right now, there is a public sandbox here that will just work and guide you. You only need to have a Google account to be able to execute them, as this exposes our Jupyter notebook via the Colab environment. You are also free to download and use this notebook with any other provider or even your own local Jupyter and it will work just the same: the queries are all shipped to our own, small public backend no matter what. However, this may require a bit of configuration (JAVA_HOME pointing to Java 17 or 21, and if you have conflicting Spark installations in addition to pyspark, SPARK_HOME pointing to a Spark 4.0 installation).

If you do not have a Google account, you can also use our simpler sandbox page without Jupyter, here where you can type small queries and see the results.

With the sandboxes above, you can only inline your data in the query or access a dataset with an HTTP URL.

Once you want to take it to the next level and query your own data on your laptop, you will find instructions below to use RumbleDB on your own computer manually, which, among other things, will allow you to query any files stored on your local disk. And then, you can take a leap of faith and use RumbleDB on a large cluster (Amazon EMR, your company's cluster, etc).

It is also possible to install RumbleDB with brew; however, there is currently no way to adjust memory usage with this method. To install RumbleDB with brew, type the commands:

You can test that it works with:

Then, launch a JSONiq shell with:

The RumbleDB shell appears:

You can now start typing simple queries like the following few examples. Press the return key three times to execute a query.

Any expression in JSONiq returns a sequence of items. Any variable in JSONiq is bound to a sequence of items. Items can be objects, arrays, or atomic values (strings, integers, booleans, nulls, dates, binary, durations, doubles, decimal numbers, etc). A sequence of items can be a sequence of just one item, but it can also be empty, or it can be large enough to contain millions, billions or even trillions of items. Obviously, for sequences longer than a billion items, it is a better idea to use a cluster than a laptop. A relational table (or more generally a data frame) corresponds to a sequence of object items sharing the same schema. However, sequences of items are more general than tables or data frames and support heterogeneity seamlessly.

When passing Python values to JSONiq or getting them from a JSONiq query, the mapping to and from Python is as follows:

You can use RumbleDB from within Python programmes by running

Important note: since the jsoniq package depends on pyspark 4, Java 17 or Java 21 is a requirement. If another version of Java is installed, the execution of a Python program attempting to create a RumbleSession will lead to an error message on stderr that contains explanations.
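As a minimal sketch of checking this requirement from Python before creating a session, the snippet below parses the output of `java -version` and compares the major version against 17 and 21. The helper names `parse_java_major` and `installed_java_major` are hypothetical and are not part of the jsoniq package; the parsing assumes the usual `version "..."` line that Java prints.

```python
import re
import subprocess

# Major versions named in the note above.
SUPPORTED_MAJORS = {17, 21}


def parse_java_major(version_output):
    """Extract the major Java version from `java -version` output, or None."""
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if match is None:
        return None
    first = int(match.group(1))
    # Old releases report themselves as 1.x (for example 1.8 for Java 8).
    if first == 1 and match.group(2) is not None:
        return int(match.group(2))
    return first


def installed_java_major():
    """Run `java -version` and return the major version, or None if unavailable."""
    try:
        result = subprocess.run(
            ["java", "-version"], capture_output=True, text=True, check=False
        )
    except FileNotFoundError:
        return None
    # Java prints its version banner on stderr; stdout is included just in case.
    return parse_java_major(result.stderr + result.stdout)


if __name__ == "__main__":
    major = installed_java_major()
    if major in SUPPORTED_MAJORS:
        print(f"Java {major} found: suitable for the jsoniq package.")
    else:
        print(f"Java {major} found: Java 17 or 21 is required.")
```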

You can control your Java version with:

Information about how this package is used can be found in this section.

| Python | JSONiq |
|--------|--------|
| tuple | sequence of items |
| dict | object item |
| list | array item |
| str | string item |
| int | integer item |
| bool | boolean item |
| None | null item |

Furthermore, other JSONiq types will be mapped to string literals. Users who want to preserve JSONiq types can use the Item API instead.

JSONiq is very powerful and expressive. You will find tutorials as well as a reference on JSONiq.org.
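The Python-to-JSONiq mapping listed above can be restated as a small lookup function. This is only an illustrative sketch: `jsoniq_item_kind` is a hypothetical helper, not part of the jsoniq package, and it covers only the Python types named in the mapping.

```python
def jsoniq_item_kind(value):
    """Return the JSONiq counterpart of a Python value, per the mapping above."""
    if value is None:
        return "null item"
    # bool must be tested before int, because bool is a subclass of int in Python.
    if isinstance(value, bool):
        return "boolean item"
    if isinstance(value, int):
        return "integer item"
    if isinstance(value, str):
        return "string item"
    if isinstance(value, list):
        return "array item"
    if isinstance(value, dict):
        return "object item"
    if isinstance(value, tuple):
        return "sequence of items"
    raise TypeError(f"{type(value).__name__} is not covered by the mapping above")


if __name__ == "__main__":
    for sample in (None, True, 7, "seven", [1, 2], {"a": 1}, (1, 2)):
        print(repr(sample), "->", jsoniq_item_kind(sample))
```

Note the distinction between a list, which becomes a single array item, and a tuple, which becomes a sequence of items.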

JSONiq 1.0 is the first version of the JSONiq language, currently in use.

It is a cousin of the XQuery 3.0 language and was developed by W3C XML Query Working Group members as a proposal of how to integrate JSON support into the language, while making it appealing to the JSON community, and making it easy for an existing XQuery engine to implement.

or

brew tap rumbledb/rumble
brew install --build-from-source rumble

rumbledb run -q '1+1'

rumbledb repl

"Hello, World"

Some users who have already configured a Spark installation on their machine may encounter a version issue if SPARK_HOME points to this alternate installation, and it is a different version of Spark (e.g., 3.5 or 3.4). The jsoniq package requires Spark 4.0.

If this happens, RumbleDB should output an informative error message. There are two ways to fix such conflicts:

The easiest is to remove the SPARK_HOME environment variable completely. This will have RumbleDB fall back to the Spark 4.0 installation that ships with its pyspark dependency.

Or you can instead change the value of SPARK_HOME to point to a Spark 4.0 installation, if you have one. This would be for more advanced users who know what they are doing.
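For the first option, one possible approach is to drop SPARK_HOME from the environment of the Python process itself, before any session is created. The sketch below rests on the assumption that doing so inside the process has the same effect as unsetting the variable in the shell; the helper names `env_without_spark_home` and `clear_spark_home` are hypothetical.

```python
import os


def env_without_spark_home(environ):
    """Return a copy of an environment mapping with SPARK_HOME removed."""
    cleaned = dict(environ)
    cleaned.pop("SPARK_HOME", None)
    return cleaned


def clear_spark_home():
    """Remove SPARK_HOME from this process and return its previous value (or None)."""
    return os.environ.pop("SPARK_HOME", None)


if __name__ == "__main__":
    previous = clear_spark_home()
    if previous is None:
        print("SPARK_HOME was not set.")
    else:
        print(f"SPARK_HOME was {previous}; it is now unset for this process.")
```

The change only affects the current process and its children; your shell configuration is left untouched.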

If you have another working Spark installation on your machine, you can see which version it is with

The above command is of course expected not to work for first-time users who only installed the jsoniq package and never installed Spark additionally on their machine.

pip install jsoniq

java -version

    ____                  __    __     ____  ____
   / __ \__  ______ ___  / /_  / /__  / __ \/ __ )
  / /_/ / / / / __ `__ \/ __ \/ / _ \/ / / / __  |  The distributed JSONiq engine
 / _, _/ /_/ / / / / / / /_/ / /  __/ /_/ / /_/ /   2.0.0 "Lemon Ironwood" beta
/_/ |_|\__,_/_/ /_/ /_/_.___/_/\___/_____/_____/

Master: local[*]
Item Display Limit: 200
Output Path: -
Log Path: -
Query Path : -

rumble$

1 + 1

(3 * 4) div 5

spark-submit --version

A RumbleSession is a wrapper around a SparkSession that additionally makes sure the RumbleDB environment is in scope.

JSONiq queries are invoked with rumble.jsoniq() in a way similar to the way Spark SQL queries are invoked with spark.sql().

JSONiq variables can be bound to lists of JSON values (str, int, float, True, False, None, dict, list) or to Pyspark DataFrames. A JSONiq query can use as many variables as needed (for example, it can join between different collections).

It will later also be possible to read tables registered in the Hive metastore, similar to spark.sql(). Alternatively, the JSONiq query can also read many files of many different formats from many places (local drive, HTTP, S3, HDFS, ...) directly with simple function calls such as json-lines(), text-file(), parquet-file(), csv-file(), etc.

The resulting sequence of items can be retrieved as a list of JSON values, as a Pyspark DataFrame, or, for advanced users, as an RDD or with a streaming iteration over the items using the Item API.

It is also possible to write the sequence of items to the local disk, to HDFS, to S3, etc in a way similar to how DataFrames are written back by Pyspark.

The design goal is that it is possible to chain DataFrames between JSONiq and Spark SQL queries seamlessly. For example, JSONiq can be used to clean up very messy data and turn it into a clean DataFrame, which can then be processed with Spark SQL, spark.ml, etc.

Any feedback or error reports are very welcome.

There are many ways to install and use RumbleDB. For example:

By simply using one of our online sandboxes (Jupyter notebook or simple sandbox page)

Our newest library: by installing a pip package (pip install jsoniq)

By running the standalone RumbleDB jar with Java on your laptop

By installing with homebrew

By installing Spark yourself on your laptop (for more control on Spark parameters) and use a small RumbleDB jar with spark-submit

By using our docker image on your laptop (go to the "Run with docker" section on the left menu)

By uploading the small RumbleDB jar to an existing Spark cluster (such as AWS EMR)

By running RumbleDB as an HTTP server in the background and connecting to it in a Jupyter notebook with the %%jsoniq magic.

By installing it manually on your machine.

After installing RumbleDB, further steps could involve:

Learning JSONiq. More details can be found in the JSONiq section of this documentation.

Storing some data on S3, creating a Spark cluster on Amazon EMR (or Azure blob storage and Azure, etc), and querying the data with RumbleDB. More details are found in the cluster section of this documentation.

Using RumbleDB with Jupyter notebooks. For this, you can run RumbleDB as a server with a simple command, and get started by downloading the tutorial notebook and just clicking your way through it. More details are found in the Jupyter notebook section of this documentation. Jupyter notebooks work both locally and on a cluster.

You need to make sure that you have Java 11 or 17 and that, if you have several versions installed, JAVA_HOME correctly points to Java 11 or 17.

RumbleDB works with both Java 11 and Java 17. You can check the Java version that is configured on your machine with:

If you do not have Java, you can download and install version 11 or 17.

Do make sure it is not Java 8, which will not work.

The Python edition of RumbleDB can be used to write JSONiq queries directly in Jupyter notebook cells. You first need to install the library (pip install jsoniq).

pip install jsoniq

java -version

JSONiq is a query and processing language specifically designed for the popular JSON data model. The main ideas behind JSONiq are based on lessons learned in more than 30 years of relational query systems and more than 15 years of experience with designing and implementing query languages for semi-structured data like XML and RDF.

The main source of inspiration behind JSONiq is XQuery, which has so far proven to be a successful and productive query language for semi-structured data (in particular XML). JSONiq borrowed a large number of ideas from XQuery, like the structure and semantics of a FLWOR construct, the functional aspect of the language, the semantics of comparisons in the face of data heterogeneity, and the declarative, snapshot-based updates. However, unlike XQuery, JSONiq is not concerned with the peculiarities of XML, like mixed content, ordered children, the confusion between attributes and elements, the complexities of namespaces and QNames, or the complexities of XML Schema, and so on.

The power of XQuery's FLWOR construct and the functional aspect, combined with the simplicity of the JSON data model, results in a clean, sleek and easy to understand data processing language. As a matter of fact, JSONiq is a language that can do more than queries: it can describe powerful data processing programs, such as transformations, selections, joins of heterogeneous data sets, data enrichment, information extraction, information cleaning, and so on.

Technically, the main characteristics of JSONiq (and XQuery) are the following:

It is a set-oriented language. While most programming languages are designed to manipulate one object at a time, JSONiq is designed to process sets (actually, sequences) of data objects.

It is a functional language. A JSONiq program is an expression; the result of the program is the result of the evaluation of the expression. Expressions have a fundamental role in the language: every language construct is an expression, and expressions are fully composable.

It is a declarative language. A program specifies what result is being calculated, and does not specify low-level algorithms like the sort algorithm, the fact that an algorithm is executed in main memory or is external, on a single machine or parallelized on several machines, or what access patterns (aka indexes) are being used during the evaluation of the program. Such implementation decisions should be taken automatically, by an optimizer, based on the physical characteristics of the data and of the hardware environment, just as a traditional database would do. The language has been designed from day one with optimizability in mind.

It is designed for nested, heterogeneous, semi-structured data. Data structures in JSON can be nested with arbitrary depth, do not have a specific type pattern (i.e. are heterogeneous), and may or may not have one or more schemas that describe the data. Even in the case of a schema, such a schema can be open, and/or simply partially describe the data. Unlike SQL, which is designed to query tabular, flat, homogeneous structures, JSONiq has been designed from scratch as a query language for nested and heterogeneous data.

RumbleDB is a querying engine that allows you to query your large, messy datasets with ease and productivity. It covers the entire data pipeline: clean up, structure, normalize, validate, convert to an efficient binary format, and feed it right into Machine Learning estimators and models, all within the JSONiq language.

RumbleDB supports JSON-like datasets including JSON, JSON Lines, Parquet, Avro, SVM, CSV, ROOT as well as text files, of any size from kB to at least the two-digit TB range (we have not found the limit yet).

RumbleDB is good both at handling small amounts of data on your laptop (in which case it simply runs locally and efficiently in a single thread) and at handling large amounts of data by spreading computations on your laptop cores, or onto a large cluster (in which case it leverages Spark automagically).

RumbleDB can also be used to easily and efficiently convert data from one format to another, including from JSON to Parquet thanks to JSound validation.

It runs on many local or distributed filesystems such as HDFS, S3, Azure blob storage, and HTTP (read-only), and of course your local drive as well. You can use any of these file systems to store your datasets, but also to store and share your queries and functions as library modules with other users, worldwide or within your institution, who can import them with just one line of code. You can also output the results of your query or the log to these filesystems (as long as you have write access).

With RumbleDB, queries can be written in the tailor-made and expressive JSONiq language. Users can write their queries declaratively and start with just a few lines. No need for complex JSON parsing machinery as JSONiq supports the JSON data model natively.

The core of RumbleDB lies in JSONiq's FLWOR expressions, the semantics of which map beautifully to DataFrames and Spark SQL. Likewise expression semantics is seamlessly translated to transformations on RDDs or DataFrames, depending on whether a structure is recognized or not. Transformations are not exposed as function calls, but are completely hidden behind JSONiq queries, giving the user the simplicity of an SQL-like language and the flexibility needed to query heterogeneous, tree-like data that does not fit in DataFrames.

This documentation provides you with instructions on how to get started, examples of data sets and queries that can be executed locally or on a cluster, links to JSONiq reference and tutorials, notes on the function library implemented so far, and instructions on how to compile RumbleDB from scratch.

Please note that this is a (maturing) beta version. We welcome bug reports in the GitHub issues section.

At the end of an updating program, the resulting PUL is applied with upd:applyUpdates (part of the XQuery Update Facility standard), which is extended as follows:

1. First, before applying any update, each update primitive (except the jupd:insert-into-object primitives, which do not have any target) locks onto its target by resolving the selector on the object or array it updates. If the selector is resolved to the empty sequence, the update primitive is ignored in step 2. After this operation, each of these update primitives will contain a reference to either the pair (for an object) or the value (for an array) on or relative to which it operates.

2. Then each update primitive is applied, using the target references that were resolved in step 1. The order in which they are applied is not relevant and does not affect the final instance of the data model. After applying all updates, an error jerr:JNUP0006 is raised upon pair name collision within the same object.
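The two steps above can be illustrated with a small Python model restricted to a single object. This is only a sketch under simplifying assumptions: the tuple encoding of primitives, the function `apply_updates`, and the exception `PairNameCollision` (standing in for jerr:JNUP0006) are hypothetical, arrays and nested targets are left out, and the compatibility checks performed when PULs are merged are not modelled.

```python
class PairNameCollision(Exception):
    """Stands in for the jerr:JNUP0006 error described above."""


def apply_updates(snapshot, pul):
    """Apply a list of update primitives to a dict, without mutating the dict.

    Primitives are tuples: ("insert", {name: value, ...}), ("delete", name),
    ("replace", name, new_value) or ("rename", name, new_name).
    """
    # One mutable cell [name, value, alive] per pair, so that a primitive can
    # hold a reference to the pair it targets.
    cells = {name: [name, value, True] for name, value in snapshot.items()}
    inserted = []
    resolved = []

    # Step 1: resolve every selector against the initial snapshot.
    for primitive in pul:
        kind = primitive[0]
        if kind == "insert":
            resolved.append((kind, None, primitive[1]))  # no target to resolve
            continue
        cell = cells.get(primitive[1])
        if cell is None:
            continue  # selector resolved to nothing: the primitive is ignored
        payload = primitive[2] if len(primitive) > 2 else None
        resolved.append((kind, cell, payload))

    # Step 2: apply every primitive through its resolved reference.
    for kind, cell, payload in resolved:
        if kind == "insert":
            inserted.extend([name, value, True] for name, value in payload.items())
        elif kind == "delete":
            cell[2] = False
        elif kind == "replace":
            cell[1] = payload
        elif kind == "rename":
            cell[0] = payload
        else:
            raise ValueError(f"unknown primitive: {kind}")

    # Final check: two surviving pairs with the same name are an error.
    result = {}
    for name, value, alive in list(cells.values()) + inserted:
        if not alive:
            continue
        if name in result:
            raise PairNameCollision(name)
        result[name] = value
    return result


if __name__ == "__main__":
    pul = [("rename", "foo", "baz"), ("replace", "bar", 3), ("insert", {"x": True})]
    print(apply_updates({"foo": 1, "bar": 2}, pul))
```

Because every primitive works through a reference resolved in step 1, reordering the list does not change the outcome, which mirrors the order-independence stated above.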

RumbleDB is just a download and no installation is required.

In order to run RumbleDB, you simply need to download rumbledb-2.0.0-standalone.jar from the download page and put it in a directory of your choice, for example, right beside your data.

Wherever our instructions mention rumbledb.jar, make sure to use the name of the jar you actually downloaded instead.

You can test that it works with:

or launch a JSONiq shell with:

If you run out of memory, you can allocate more memory to Java with an additional Java parameter, e.g., -Xmx10g

The RumbleDB shell appears:

You can now start typing simple queries like the following few examples. Press the return key three times to execute a query.

or

or

Javadoc

If you plan to add the jar to your Java environment to use RumbleDB in your Java programs, the JavaDoc documentation can be found here. The entry point is the class org.rumbledb.api.Rumble.

java -version

spark-submit --version

java -jar rumbledb-2.0.0-standalone.jar run -q '1+1'

java -jar rumbledb-2.0.0-standalone.jar repl

    ____                  __    __     ____  ____
   / __ \__  ______ ___  / /_  / /__  / __ \/ __ )
  / /_/ / / / / __ `__ \/ __ \/ / _ \/ / / / __  |  The distributed JSONiq engine
 / _, _/ /_/ / / / / / / /_/ / /  __/ /_/ / /_/ /   2.0.0 "Lemon Ironwood" beta
/_/ |_|\__,_/_/ /_/ /_/_.___/_/\___/_____/_____/

Master: local[*]
Item Display Limit: 200
Output Path: -
Log Path: -
Query Path : -

rumble$

"Hello, World"

1 + 1

(3 * 4) div 5

This method gives you more control over the Spark configuration than the experimental standalone jar: in particular, you can increase the memory used, change the number of cores, and so on.

If you use Linux, Florian Kellner also kindly contributed an installation script for Linux users that roughly takes care of what is described below for you.

RumbleDB requires an Apache Spark installation on Linux, Mac or Windows. Important note: it needs to be either Spark 4, or the Scala 2.13 build of Spark 3.5.

It is straightforward to download it directly, unpack it and put it at a location of your choosing. We recommend picking Spark 4.0.0.

You then need to point the SPARK_HOME environment variable to this directory, and to additionally add the subdirectory "bin" within the unpacked directory to the PATH variable. On macOS this is done by adding.

(with SPARK_HOME appropriately set to match your unzipped Spark directory) to the file .zshrc in your home directory, then making sure to force the change with

in the shell. In Windows, changing the PATH variable is done in the control panel. In Linux, it is similar to macOS.

As an alternative, users who love the command line can also install Spark with a package management system instead, such as brew (on macOS) or apt-get (on Ubuntu). However, these might be less predictable than a raw download.

You can test that Spark was correctly installed with:

You need to make sure that you have Java 11 (for Spark 3.5) or 17 (for Spark 3.5 or 4.0) or 21 (for Spark 4.0) and that, if you have several versions installed, JAVA_HOME correctly points to the correct Java installation. Spark only supports Java 11 or 17 or 21 depending on the version.

Spark 4+ is documented to work with both Java 17 and Java 21. If there is an issue with the Java version, RumbleDB will inform you with an appropriate error message. You can check the Java version that is configured on your machine with:

Like Spark, RumbleDB is just a download and no installation is required.

In order to run RumbleDB, you simply need to download one of the small .jar files from the download page and put it in a directory of your choice, for example, right beside your data.

If you use Spark 3.5, use rumbledb-2.0.0-for-spark-3.5-scala-2.13.jar.

If you use Spark 4.0, use rumbledb-2.0.0-for-spark-4.0.jar.

These jars do not embed Spark, since you chose to set it up separately. They will work with your Spark installation with the spark-submit command.

Wherever our instructions mention rumbledb.jar, make sure to replace it with the actual name of the jar file you downloaded.

In a shell, from the directory where the RumbleDB .jar lies, type, all on one line:

replacing rumbledb.jar with the actual name of the jar file you downloaded.

The RumbleDB shell appears:

You can now start typing simple queries like the following few examples. Press the return key three times to execute a query.

or

or

RumbleDB can be run as an HTTP server that listens for queries. In order to do so, you can use the --server and --port parameters:

This command will not return until you force it to (Ctrl+C on Linux and Mac). This is because the server has to run permanently to listen to incoming requests.

Most users will not have to do anything beyond running the above command. For most of them, the next step would be to open a Jupyter notebook that connects to this server automatically.

This HTTP server is built as a basic server for the single user use case, i.e., the user runs their own RumbleDB server on their laptop or cluster, and connects to it via their Jupyter notebook, one query at a time. Some of our users have more advanced needs, or have a larger user base, and typically prefer to implement their own HTTP server, launching RumbleDB queries either via the public RumbleDB Java API (like the basic HTTP server does, so its code can serve as a demo of the Java API) or via the RumbleDB CLI.

Caution! Launching a server always has consequences on security, especially as RumbleDB can read from and write to your disk, so make sure you activate your firewall. In later versions, we may support authentication tokens.

The HTTP server is not meant to be used directly by end users, but instead to make it possible to integrate RumbleDB in other languages and environments, such as Python and Jupyter notebooks.

To test that the server is running, you can try the following address in your browser, assuming you have a query stored locally at /tmp/query.jq. All queries have to go to the /jsoniq path.

The request returns a JSON object, and the resulting sequence of items is in the values array.

Almost all parameters from the command line are exposed as HTTP parameters.
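As a rough sketch of what a client for this server might look like, the helpers below build a request URL for the /jsoniq path and pull the values array out of a response. The function names `build_query_url` and `extract_values` are hypothetical, and the parameter name `query-path` is an assumption that mirrors the command-line parameter of the same name; only the /jsoniq path and the values array come from the description above.

```python
import json
from urllib.parse import urlencode


def build_query_url(host, port, parameters=None):
    """Build the URL of a request to the RumbleDB HTTP server."""
    base = f"http://{host}:{port}/jsoniq"
    if not parameters:
        return base
    # urlencode percent-encodes reserved characters such as "/" in the values.
    return base + "?" + urlencode(parameters)


def extract_values(response_text):
    """Return the resulting sequence of items from a JSON response body."""
    return json.loads(response_text)["values"]


if __name__ == "__main__":
    # "query-path" is assumed here, by analogy with the command line.
    print(build_query_url("localhost", 8001, {"query-path": "/tmp/query.jq"}))
    # A hand-written response body, for illustration only.
    print(extract_values('{"values": [2]}'))
```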

A query can also be submitted in the request body:

With the HTTP server running, if you have installed Python and Jupyter notebooks (for example with the Anaconda data science package that does all of it automatically), you can create a RumbleDB magic by just executing the following code in a cell:

Where, of course, you need to adapt the port (8001) to the one you picked previously.

Then, you can execute queries in subsequent cells with:

or on multiple lines:

You can also let RumbleDB run as an HTTP server on the master node of a cluster, e.g. on Amazon EMR or Azure. You just need to:

Create the cluster (it is usually just the push of a few buttons in Amazon or Azure)

Wait for a few minutes

Make sure that your own IP has incoming access to EMR machines by configuring the security group properly. You usually only need to do so the first time you set up a cluster (if your IP address remains the same), because the security group configuration will be reused for future EMR clusters.

Then there are two options

Connect to the master with SSH with an extra parameter for securely tunneling the HTTP connection (for example -L 8001:localhost:8001 or any port of your choosing)

Download the RumbleDB jar to the master node

wget https://github.com/RumbleDB/rumble/releases/download/v1.24.0/rumbledb-1.24.0.jar

Launch the HTTP server on the master node (it will be accessible under http://localhost:8001/jsoniq).

There is also another way that does not need any tunnelling: you can specify the hostname of your EC2 machine (copied over from the EC2 dashboard) with the --host parameter. For example, with the placeholder <ec2-hostname>:

You also need to make sure in your EMR security group that the chosen port (e.g., 8001) is accessible from the machine in which you run your Jupyter notebook. Then, you can point your Jupyter notebook on this machine to http://<ec2-hostname>:8001/jsoniq.

Be careful not to open this port to the whole world, as queries can be sent that read and write to the EC2 machine and anything it has access to (like S3).

There are several ways to get back the output of the JSONiq query. There are many examples of use further down this page.

| Method | Description | Requires |
|--------|-------------|----------|
| availableOutputs() | Returns a list that helps you understand which output methods you can call. The strings in this list can be Local, RDD, DataFrame, or PUL. | - |
| json() | Returns the results as a tuple containing dicts, lists, strs, ints, floats, booleans, Nones. | Local |

RumbleDB can work out of the box with pandas DataFrames, both as input and (when the output has a schema) as output.

bind() also accepts pandas DataFrames:

import pandas as pd

# rumble is assumed to be an existing RumbleSession
data = {'Name': ['Alice', 'Bob', 'Charlie'],
        'Age': [30, 25, 35]}
pdf = pd.DataFrame(data)
rumble.bind('$a', pdf)
seq = rumble.jsoniq('$a.Name')

The same goes for extra named parameters.

data = {'Name': ['Alice', 'Bob', 'Charlie'],
        'Age': [30, 25, 35]}
pdf = pd.DataFrame(data)
seq = rumble.jsoniq('$a.Name', a=pdf)

It is also possible to get the results back as a pandas DataFrame with pdf() (if the output has a schema, which you can check by calling availableOutputs() and seeing if "DataFrame" is in the returned list).
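The check on availableOutputs() can be wrapped in a small helper that falls back to json() when no schema is available. This is a sketch only: `to_pandas_or_json` is a hypothetical name, and `seq` is assumed to be the sequence object returned by rumble.jsoniq(), exposing the availableOutputs(), pdf() and json() methods described in this documentation.

```python
def to_pandas_or_json(seq):
    """Return a pandas DataFrame when the output has a schema, else JSON values."""
    if "DataFrame" in seq.availableOutputs():
        return seq.pdf()
    # No schema detected: fall back to a tuple of plain Python values.
    return seq.json()
```

A caller would then write `result = to_pandas_or_json(rumble.jsoniq('$a.Name', a=pdf))` and inspect the type of `result`.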

We show here how to install RumbleDB from the GitHub repository and build it yourself if you wish to do so (for example, to use the latest master). However, the easiest way to use RumbleDB is to simply download the already compiled .jar files.

The following software is required:

Java: the version of Java is important, as RumbleDB only works with Java 11 (Standalone or Spark 3.5), 17 (Standalone or Spark 3.5 or Spark 4 or Python) or 21 (Spark 4 or Python). The current master branch corresponds to Spark 4.0, meaning that Java 17 or 21 is required.

The syntax to start a session is similar to that of Spark. A RumbleSession is a SparkSession that additionally knows about RumbleDB. All attributes and methods of SparkSession are also available on RumbleSession.

Even though RumbleDB uses Spark internally, it can be used without any knowledge of Spark.

Executing a query is done with rumble.jsoniq() like so.

A query returns a sequence of items, here the sequence with just the integer item 2.

There are several ways to retrieve the results of the query. Calling json() is just one of them: it retrieves the sequence of items as a tuple of JSON values that Python can process.

RumbleDB can work out of the box with pyspark DataFrames, both as input and (when the output has a schema) as output.

The power users can also interface our library with pyspark DataFrames. JSONiq sequences of items can have billions of items, and our library supports this out of the box: it can also run on clusters on AWS Elastic MapReduce for example. But your laptop is just fine, too: it will spread the computations on your cores. You can bind a DataFrame to a JSONiq variable. JSONiq will recognize this DataFrame as a sequence of object items.

Creating a data frame is also similar to Spark (but using the rumble object).

This is how to bind a JSONiq variable to a dataframe. You can bind as many variables as you want.

It is possible to bind a JSONiq variable to a tuple of native Python values and then use it in a query. In JSONiq, variables are bound to sequences of items, just like the results of JSONiq queries are sequences of items. A Python tuple will be seamlessly converted to a sequence of items by the library. Currently we only support strs, ints, floats, booleans, None, and (recursively) lists and dicts. But if you need more (like date, bytes, etc) we will add them without any problem. JSONiq has a rich type system.

Values can be passed with extra named parameters, like so.

It is also possible to bind variables more durably (across multiple jsoniq() calls) with bind().

It is possible to bind only one value. Then it must be provided as a singleton tuple. This is because in JSONiq, an item is the same as a sequence of one item.
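In Python, a singleton tuple needs a trailing comma: `(42,)` is a tuple, whereas `(42)` is just the integer 42. The snippet below is a small sketch of a wrapper that guards against this slip; `as_sequence` is a hypothetical helper and is not part of the jsoniq package.

```python
def as_sequence(value):
    """Wrap a single Python value into a singleton tuple; leave tuples untouched."""
    return value if isinstance(value, tuple) else (value,)


if __name__ == "__main__":
    print(as_sequence(42))       # a one-item sequence
    print(as_sequence((1, 2)))   # already a sequence, returned as is
    print(as_sequence([1, 2]))   # a list is one array item, so it gets wrapped
```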

For convenience and code readability, you can also use bindOne().

A variable that was durably bound with bind() or bindOne() can be unbound with unbind().

The Python edition of RumbleDB comes out of the box with a JSONiq magic.

If you are in a Jupyter notebook and have installed the jsoniq pip package, you can activate the jsoniq magic with:

Then, you can run JSONiq in standalone cells and see the results:

Of course, you can still continue to use rumble.jsoniq() calls and process the outputs as you see fit.

An example of the magic in action is available in our .

Note: This is a different magic than the magic that works with the RumbleDB HTTP server. It is more modern and running a server is no longer needed with this different magic. It suffices to install the jsoniq Python package.

Generally, it is possible to write output to disk using the pandas DataFrame API, the pyspark DataFrame API, or a Python library that writes JSON values to disk.

For convenience, we provide a way to also directly do so with the sequence object output by the query.

It is possible to write the output to a file locally or on a cluster. The API is similar to that of Spark DataFrames. Note that it creates a directory and stores the (potentially very large) output in a sharded directory. RumbleDB was already tested with up to 64 AWS machines and hundreds of TBs of data.

Of course the examples below are so small that it makes more sense to process the results locally with Python, but this shows how GBs or TBs of data obtained from JSONiq can be written back to disk.

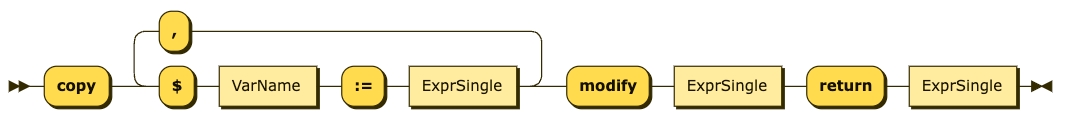

Updates can be applied to a clone of an existing instance with the copy-modify-return expression.

The content of the modify clause may build a complex Pending Update List with multiple updates. Remember that, with snapshot semantics, each update is applied against the initial snapshot, and updates do not see each other's effects.

Updating expressions can also be combined with conditional expressions (in the then and else clauses), switch expressions (in the return clauses), FLWOR expressions (in the return clause), etc for more powerful queries based on patterns in the available data (from any source visible to the JSONiq query).

The updates generated inside the modify clause may only target the cloned object, i.e., the variable specified in the copy clause.

Example 191. JSON copy-modify-return expression

Result: { "foo" : true, "bar" : 123, "foobar" : [ true, false ] }
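The behavior of this example can be imitated in plain Python, which may help when reasoning about snapshot semantics. The sketch below is not RumbleDB code: the helper `copy_modify_return` and the tuple encoding of updates are hypothetical, and the application order (replaces, then deletes, then inserts) is an assumption chosen because it reproduces the documented result; the normative order is defined by the JSONiq update specification.

```python
import copy


def copy_modify_return(source, build_updates):
    """Clone the source, collect updates against a snapshot, apply them together."""
    clone = copy.deepcopy(source)       # the copy clause
    snapshot = copy.deepcopy(clone)     # updates only ever read this snapshot
    pending = build_updates(snapshot)   # the modify clause builds the pending update list
    # Assumed order for this sketch: replaces, then deletes, then inserts.
    for kind in ("replace", "delete", "insert"):
        for update in pending:
            if update[0] != kind:
                continue
            if kind == "replace":
                clone[update[1]] = update[2]
            elif kind == "delete":
                clone.pop(update[1], None)
            else:
                clone.update(update[1])
    return clone                        # the return clause


def updates(obj):
    # Mirrors the insert, delete and replace of Example 191.
    return [
        ("insert", {"bar": 123, "foobar": [True, False]}),
        ("delete", "bar"),
        ("replace", "foo", True),
    ]


original = {"foo": "bar", "bar": [1, 2, 3]}
result = copy_modify_return(original, updates)
print(result)
```

The original object is left untouched, which is the point of the copy clause: only the clone receives the updates.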

It is possible to access RumbleDB's advanced configuration parameters with

Then, you can change the value of some parameters. For example, you can increase the number of JSON values that you can retrieve with a json() call:

You can also configure RumbleDB to output verbose information about the internal query plan, type and mode detection, and optimizations. This can be of interest to data engineers or researchers to understand how RumbleDB works.

The complete API for configuring RumbleDB is accessible in our pages. These methods are also callable in Python.

Warning: some of the configuration methods do not make sense in Python and are specific to the command line edition of RumbleDB (such as setting the query content or an output path and input/output format). Also, setting external variables in Python should not be done via the configuration, but with the bind() and unbind() functions or extra parameters in jsoniq() calls.

RumbleDB uses the following software:

ANTLR v4 Framework - BSD License

Apache Commons Text - Apache License

Apache Commons Lang - Apache License

JSONiq follows the XQuery Update Facility and introduces update primitives and update expressions specific to JSON data.

In JSONiq, updates are not immediately applied. Rather, a snapshot of the current data is taken, and a list of updates, called the Pending Update List, is collected. Then, upon explicit request by the user (via specific expressions), the Pending Update List is applied atomically, leading to a new snapshot. It is also possible for an engine to persist (to the local disk, to a database management system, to a data lake...) the resulting Pending Update List after a query has been completed.

In the middle of a program, several PULs can be produced against the same snapshot. They are then merged with upd:mergeUpdates (part of the XQuery Update Facility standard), which is extended as follows.

Several deletes on the same object are replaced with a unique delete on that object, with a list of all selectors (names) to be deleted, where duplicates have been eliminated.

Several deletes on the same array and selector (position) are replaced with a unique delete on that array and with that selector.

Several inserts on the same array and selector (position) are equivalent to a unique insert on that array and selector with the content of those original inserts appended in an implementation-dependent order (like XQUF).

Apache HTTP client - Apache License

gson - Apache License

JLine terminal framework - BSD License

Kryo serialization framework - BSD License

Laurelin (ROOT parser) - BSD-3

Spark Libraries - Apache License

As well as the JSONiq language - CC BY-SA 3.0 License

Several inserts on the same object are equivalent to a unique insert where the objects containing the pairs to insert are merged. An error jerr:JNUP0005 is raised if a collision occurs.

Several replaces on the same object or array and with the same selector raise an error jerr:JNUP0009.

Several renames on the same object and with the same selector raise an error jerr:JNUP0010.

If there is a replace and a delete on the same object or array and with the same selector, the replace is omitted in the merged PUL.

If there is a rename and a delete on the same object or array and with the same selector, the rename is omitted in the merged PUL.
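Some of the merge rules above can be sketched in a few lines of Python. This is an illustration only, not the engine's implementation: the tuple layout `(kind, target, selector, payload)`, the function `merge_puls` and the exception class are hypothetical, and the sketch covers only deletes, replaces and renames on objects (inserts and array positions are left out).

```python
class UpdateConflict(Exception):
    """Carries the error code named in the merge rules."""


def merge_puls(*puls):
    """Merge pending update lists made of (kind, target, selector, payload) tuples."""
    deletes = {}   # target -> de-duplicated list of selectors
    replaces = {}  # (target, selector) -> new value
    renames = {}   # (target, selector) -> new name
    for pul in puls:
        for kind, target, selector, payload in pul:
            key = (target, selector)
            if kind == "delete":
                selectors = deletes.setdefault(target, [])
                if selector not in selectors:
                    selectors.append(selector)
            elif kind == "replace":
                if key in replaces:
                    raise UpdateConflict("jerr:JNUP0009")
                replaces[key] = payload
            elif kind == "rename":
                if key in renames:
                    raise UpdateConflict("jerr:JNUP0010")
                renames[key] = payload
            else:
                raise ValueError("unsupported update kind: " + kind)
    # A replace or rename is omitted when the same selector is also deleted.
    deleted = {(t, s) for t, sels in deletes.items() for s in sels}
    merged = [("delete", t, sels, None) for t, sels in deletes.items()]
    merged += [("replace", t, s, v) for (t, s), v in replaces.items() if (t, s) not in deleted]
    merged += [("rename", t, s, v) for (t, s), v in renames.items() if (t, s) not in deleted]
    return merged


first = [("delete", "obj1", "x", None), ("replace", "obj1", "x", 1)]
second = [("delete", "obj1", "x", None), ("delete", "obj1", "y", None),
          ("rename", "obj1", "z", "w")]
merged = merge_puls(first, second)
print(merged)
```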

Sequence length below the materialization cap. The default is 200 but it can be increased in the RumbleDB configuration.

| Method | Description | Required entry in availableOutputs() | Limitation |
|---|---|---|---|
| df() | Returns the results as a pyspark data frame | DataFrame (i.e., RumbleDB was able to infer an output schema) | No limitation, but beyond a billion items, you should use a Spark cluster. |
| pdf() | Returns the results as a pandas data frame | DataFrame (i.e., RumbleDB was able to infer an output schema) | Should fit in your computer's memory. |
| rdd() | Returns the results as an RDD containing dicts, lists, strs, ints, floats, booleans, Nones (experimental) | RDD | No limitation, but beyond a billion items, you should use a Spark cluster. |
| items() | Returns the results as a list containing Java Item objects that can be queried with the RumbleDB Item API. Will contain more information and more accurate typing. | Local | Sequence length below the materialization cap. The default is 200 but it can be increased in the RumbleDB configuration. |
| open(), hasNext(), nextJSON(), close() | Allows streaming (with no limitation of length) through individual items as dicts, lists, strs, ints, floats, booleans, Nones. | Local | No limitation, as long as you go through the stream without saving all past items. |
| open(), hasNext(), next(), close() | Allows streaming (with no limitation of length) through individual items as Java Item objects that can be queried with the RumbleDB Item API. Will contain more information and more accurate typing. | Local | No limitation, as long as you go through the stream without saving all past items. |
| applyUpdates() | Persists the Pending Update List produced by the query (to the Delta Lake or a table registered in the Hive metastore). | PUL | - |
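The streaming methods listed above (open(), hasNext(), nextJSON(), close()) fit naturally into a Python generator. The sketch below is one possible wrapper, not part of the jsoniq package: `stream_json` is a hypothetical helper, and `FakeSequence` is a stand-in that only exists so the example runs without a RumbleDB session. With a real session, the object returned by rumble.jsoniq() would take its place.

```python
def stream_json(seq):
    """Yield items one at a time via open/hasNext/nextJSON/close."""
    seq.open()
    try:
        while seq.hasNext():
            yield seq.nextJSON()
    finally:
        seq.close()  # always release the iterator, even if the consumer stops early


class FakeSequence:
    """Hypothetical stand-in that mimics the four streaming methods."""

    def __init__(self, values):
        self._values = list(values)
        self._pos = 0
        self.closed = False

    def open(self):
        self._pos = 0

    def hasNext(self):
        return self._pos < len(self._values)

    def nextJSON(self):
        value = self._values[self._pos]
        self._pos += 1
        return value

    def close(self):
        self.closed = True


fake = FakeSequence([{"foo": 1}, [1, 2], "bar", None])
# Count the items without keeping them, so memory use stays constant.
total = sum(1 for _ in stream_json(fake))
print(total)
```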

JSONiq 3.1 is an initiative of the RumbleDB team that aligns JSONiq more closely with XQuery 3.1, which has now become a W3C recommendation, but keeping what makes it JSONiq: the flagship feature being the ability to copy-paste JSON into a JSONiq query and with a navigation syntax that appeals to the JSON community.

JSONiq 3.1 does not require a distinct data model (JDM) since XQuery 3.1 supports maps and arrays. As a result, JSONiq 3.1 objects are the same as XQuery 3.1 maps and JSONiq 3.1 arrays are the same as XQuery 3.1 arrays.

JSONiq 3.1 does not require a separate serialization mechanism, since XQuery 3.1 supports the JSON output method.

JSONiq 3.1 benefits from all the map and array builtin functions defined in XQuery 3.1.

JSONiq 3.1 is fully interoperable with XQuery 3.1 and can execute on the same virtual machine (similar to Scala and Java).

This also paves the way for JSONiq 4.0 which will also be aligned with XQuery 4.0 as much as is technically possible.

As a result, the specification for JSONiq 3.1 is even more minimal than that of JSONiq 1.0. This makes it easy for any existing XQuery engine to support it and step into the JSON community.

RumbleDB is slowly deploying the use of JSONiq 3.1 but it will take some time as we make sure to sweep in all corners.

In JSONiq 3.1, the context item is obtained through $$ and not through a dot.

String literals use JSON escaping instead of XML escaping (backslash, not ampersand).

In map (object) constructors, the "map" keyword in front is optional.

A name test must be prefixed with $$/ and cannot stand on its own.

true and false exist as literals and do not have to be obtained through function calls (true(), false()).

null exists as a literal and stands for the empty sequence.

The dot . and double square brackets [[ ]] act as syntactic sugars for ? lookup.

The data model standardized by the W3C working group is more generic and allows for atomic object keys that are not necessarily strings (dates, etc). Also, an object value or an array value can be a sequence of items and does not need to be a single item. The particular case in which object keys are strings and values are single items (or empty) corresponds to the JSON use.

Null does not exist as its own type in JSONiq 3.1, instead it is mapped to the empty sequence.

There are other minor changes in semantics that correspond to the alignment with XQuery 3.1 such as Effective Boolean Values, comparison, etc.

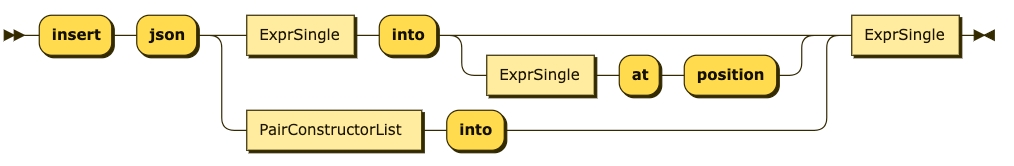

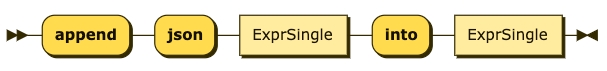

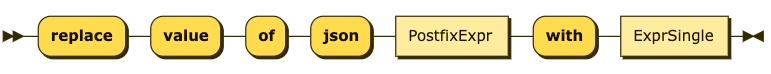

The JSON update syntax was not integrated yet into the core language. This is planned, and the syntax will be simplified (no json keyword, dot lookup allowed here as well).

The semantics for the JSON serialization method is the same as in the JSONiq Extension to XQuery. It is still under discussion how to escape special characters with the Text output method.

And then use Jupyter notebooks in the same way you would do it locally (it magically works because of the tunneling)

print(seq.pdf())

print(rumble.jsoniq("""

for $v in $c

let $parity := $v mod 2

group by $parity

return { switch($parity)

case 0 return "even"

case 1 return "odd"

default return "?" : $v

}

""", c=(1,2,3,4, 5, 6)).json())

print(rumble.jsoniq("""

for $i in $c

return [

for $j in $i

return { "foo" : $j }

]

""", c=([1,2,3],[4,5,6])).json())

print(rumble.jsoniq('{ "results" : $c.foo[[2]] }',

c=({"foo":[1,2,3]},{"foo":[4,{"bar":[1,False, None]},6]})).json())

rumble.bind('$c', (1,2,3,4, 5, 6))

print(rumble.jsoniq("""

for $v in $c

let $parity := $v mod 2

group by $parity

return { switch($parity)

case 0 return "even"

case 1 return "odd"

default return "?" : $v

}

""").json())

print(rumble.jsoniq("""

for $v in $c

let $parity := $v mod 2

group by $parity

return { switch($parity)

case 0 return "gerade"

case 1 return "ungerade"

default return "?" : $v

}

""").json())

rumble.bind('$c', ([1,2,3],[4,5,6]))

print(rumble.jsoniq("""

for $i in $c

return [

for $j in $i

return { "foo" : $j }

]

""").json())

rumble.bind('$c', ({"foo":[1,2,3]},{"foo":[4,{"bar":[1,False, None]},6]}))

print(rumble.jsoniq('{ "results" : $c.foo[[2]] }').json())

seq = rumble.jsoniq("$a.Name");

seq.write().mode("overwrite").json("outputjson");

seq.write().mode("overwrite").parquet("outputparquet");

seq = rumble.jsoniq("1+1");

seq.write().mode("overwrite").text("outputtext");

Spark, version 4.0.0 (for example)

Ant, version 1.10

Maven 3.9.9

Type the following commands to check that the necessary commands are available. If not, you may need to either install the software, or make sure that it is on the PATH.

You first need to download the rumble code to your local machine.

In the shell, go to the desired location:

Clone the github repository:

Go to the root of this repository:

You can compile the entire project like so:

After successful completion, you can check the target directory, which should contain the compiled classes as well as the JAR file rumbledb-2.0.0-jar-with-dependencies.jar.

The most straightforward way to test whether the above steps were successful is to run the RumbleDB shell locally, like so:

The RumbleDB shell should start:

You can now start typing interactive queries. Queries can span over multiple lines. You need to press return 3 times to confirm.

This produces the following results (>>> show the extra, empty lines that appear on the first two presses of the return key).

You can try a few more queries.

This is it. RumbleDB is set up and ready to go locally. You can now move on to a JSONiq tutorial. A RumbleDB tutorial will also follow soon.

You can also try to run the RumbleDB shell on a cluster if you have one available and configured -- this is done with the same command, as the master and deployment mode are usually already set up in cloud-managed clusters. More details are provided in the rest of the documentation.

Below are a few examples showing what is possible with JSONiq. You can learn JSONiq with our interactive tutorial. You will also find a full language reference here as well as a list of builtin functions.

For complex queries, you can use Python's ability to spread strings over multiple lines, and with no need to escape special characters.

from jsoniq import RumbleSession

rumble = RumbleSession.builder.getOrCreate();

items = rumble.jsoniq('1+1')

python_tup = items.json()

print(python_tup)

You can also, instead of the bind() call, pass the pyspark DataFrame directly in jsoniq() with an extra named parameter:

There are several ways to collect the outputs, depending on the user needs but also on the query supplied. The following method returns a list containing one or several of "DataFrame", "RDD", "PUL", "Local".

If DataFrame is in the list, df() can be invoked.

If RDD is in the list, rdd() can be invoked.

If Local is in the list, items() or json() can be invoked, as well as the local iterator API.

If the output of the JSONiq query is structured (i.e., RumbleDB was able to detect a schema), then we can extract a regular data frame that can be further processed with spark.sql() or rumble.jsoniq().

We are continuously working on the detection of schemas, and RumbleDB will get better at it with time. JSONiq is a very powerful language and can also produce heterogeneous output "by design". In that case, you need to use rdd() instead of df(), or collect the list of JSON values (see further down). Remember that availableOutputs() tells you what is at your disposal.
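The rules above suggest a simple dispatch on the result of availableOutputs(). The helper below is a hedged sketch rather than part of the API: `collect` and `FakeResult` are hypothetical names, and the stand-in class returns placeholder strings so that the example runs without Spark. Only the method names availableOutputs(), df(), rdd() and json() come from this documentation.

```python
def collect(seq):
    """Pick the richest collection method that the query result supports."""
    modes = seq.availableOutputs()
    if "DataFrame" in modes:
        return "df", seq.df()      # structured output with a detected schema
    if "RDD" in modes:
        return "rdd", seq.rdd()    # heterogeneous but still distributed
    if "Local" in modes:
        return "json", seq.json()  # materialized locally, subject to the cap
    raise ValueError("no collectable output among: " + ", ".join(modes))


class FakeResult:
    """Hypothetical stand-in for the object returned by rumble.jsoniq()."""

    def __init__(self, modes):
        self._modes = modes

    def availableOutputs(self):
        return self._modes

    def df(self):
        return "a DataFrame"

    def rdd(self):
        return "an RDD"

    def json(self):
        return ("a", "tuple")


print(collect(FakeResult(["DataFrame", "RDD", "Local"])))
print(collect(FakeResult(["Local"])))
```

A result that only lists PUL is deliberately rejected here, since it is meant to be persisted with applyUpdates() rather than collected.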

A DataFrame output by JSONiq can be reused as input to a Spark SQL query.

(Remember that rumble is a wrapper around a SparkSession object, so you can use rumble.sql() just like spark.sql())

A DataFrame output by Spark SQL can be reused as input to a JSONiq query.

And a DataFrame output by JSONiq can be reused as input to another JSONiq query.

data = [("Alice", 30), ("Bob", 25), ("Charlie", 35)];

columns = ["Name", "Age"];

df = spark.createDataFrame(data, columns);

rumble.bind('$a', df);

By default, the output will be in the form of serialized JSON values. If the output is structured, then you can change this default behavior to show it in the form of a DataFrame instead.

For a pandas DataFrame:

For a pyspark DataFrame:

Note that it will not work in all cases. If the output is not fully structured or RumbleDB is unable to infer a DataFrame schema, you can specify the schema yourself. The schema language is called JSound and you will find a tutorial here.

It is possible to measure the response time with the -t parameter:

%load_ext jsoniqmagic

%%jsoniq

{"foobar":1}

If you get an out-of-memory error, it is possible to allocate memory when you build the Rumble session with a config() call. This is exactly the same way it is done when building a Spark session. The config() call can of course be used in combination with any other method calls that are part of the builder chain (withDelta(), appName(), config(), etc).

For example:

conf = rumble.getRumbleConf()

conf.setResultSizeCap(1000)

export SPARK_HOME=/path/to/spark-4.0.0-bin-hadoop3

export PATH=$SPARK_HOME/bin:$PATH

. ~/.zshrc

spark-submit --version

java -version

spark-submit rumbledb.jar repl

____ __ __ ____ ____

/ __ \__ ______ ___ / /_ / /__ / __ \/ __ )

/ /_/ / / / / __ `__ \/ __ \/ / _ \/ / / / __ | The distributed JSONiq engine

/ _, _/ /_/ / / / / / / /_/ / / __/ /_/ / /_/ / 2.0.0 "Lemon Ironwood" beta

/_/ |_|\__,_/_/ /_/ /_/_.___/_/\___/_____/_____/

Master: local[*]

Item Display Limit: 200

Output Path: -

Log Path: -

Query Path : -

rumble$

"Hello, World"

1 + 1

(3 * 4) div 5

spark-submit rumbledb.jar serve -p 8001

http://localhost:8001/jsoniq?query-path=/tmp/query.jq

{ "values" : [ "foo", "bar" ] }

curl -X POST --data '1+1' http://localhost:8001/jsoniq

!pip install rumbledb

%load_ext rumbledb

%env RUMBLEDB_SERVER=http://localhost:8001/jsoniq

%jsoniq 1 + 1

%%jsoniq

for $doc in json-lines("my-file")

where $doc.foo eq "bar"

return $doc

spark-submit rumbledb.jar serve -p 8001 -h <ec2-hostname>

rumble.bind('$c', (42,))

print(rumble.jsoniq('for $i in 1 to $c return $i*$i').json())

rumble.bindOne('$c', 42)

print(rumble.jsoniq('for $i in 1 to $c return $i*$i').json())

rumble.unbind('$c')

$ java -version

$ mvn --version

$ ant -version

$ spark-submit --version

$ cd some_directory

$ git clone https://github.com/RumbleDB/rumble.git

$ cd rumble

$ mvn clean compile assembly:single

$ spark-submit target/rumbledb-2.0.0-jar-with-dependencies.jar repl

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

____ __ __ ____ ____

/ __ \__ ______ ___ / /_ / /__ / __ \/ __ )

/ /_/ / / / / __ `__ \/ __ \/ / _ \/ / / / __ | The distributed JSONiq engine

/ _, _/ /_/ / / / / / / /_/ / / __/ /_/ / /_/ / 2.0.0 "Lemon Ironwood" beta

/_/ |_|\__,_/_/ /_/ /_/_.___/_/\___/_____/_____/

Master: local[2]

Item Display Limit: 1000

Output Path: -

Log Path: -

Query Path : -

rumble$

rumble$ "Hello, world!"

rumble$ "Hello, world!"

>>>

>>>

Hello, world

rumble$ 2 + 2

>>>

>>>

4

rumble$ 1 to 10

>>>

>>>

( 1, 2, 3, 4, 5, 6, 7, 8, 9, 10)

seq = rumble.jsoniq("""

let $stores :=

[

{ "store number" : 1, "state" : "MA" },

{ "store number" : 2, "state" : "MA" },

{ "store number" : 3, "state" : "CA" },

{ "store number" : 4, "state" : "CA" }

]

let $sales := [

{ "product" : "broiler", "store number" : 1, "quantity" : 20 },

{ "product" : "toaster", "store number" : 2, "quantity" : 100 },

{ "product" : "toaster", "store number" : 2, "quantity" : 50 },

{ "product" : "toaster", "store number" : 3, "quantity" : 50 },

{ "product" : "blender", "store number" : 3, "quantity" : 100 },

{ "product" : "blender", "store number" : 3, "quantity" : 150 },

{ "product" : "socks", "store number" : 1, "quantity" : 500 },

{ "product" : "socks", "store number" : 2, "quantity" : 10 },

{ "product" : "shirt", "store number" : 3, "quantity" : 10 }

]

let $join :=

for $store in $stores[], $sale in $sales[]

where $store."store number" = $sale."store number"

return {

"nb" : $store."store number",

"state" : $store.state,

"sold" : $sale.product

}

return [$join]

""");

print(seq.json());

seq = rumble.jsoniq("""

for $product in json-lines("http://rumbledb.org/samples/products-small.json", 10)

group by $store-number := $product.store-number

order by $store-number ascending

return {

"store" : $store-number,

"products" : [ distinct-values($product.product) ]

}

""");

print(seq.json());

res = rumble.jsoniq('$a.Name');

res = rumble.jsoniq('$a.Name', a=df);

modes = res.availableOutputs();

for mode in modes:
    print(mode)

df = res.df();

df.show();

df.createTempView("myview")

df2 = spark.sql("SELECT * FROM myview").toDF("name");

df2.show();

rumble.bind('$b', df2);

seq2 = rumble.jsoniq("for $i in 1 to 5 return $b");

df3 = seq2.df();

df3.show();

rumble.bind('$b', df3);

seq3 = rumble.jsoniq("$b[position() lt 3]");

df4 = seq3.df();

df4.show();

%%jsoniq -pdf

for $i in 1 to 10000000

return { "foobar" : $i}

%%jsoniq -df

for $i in 1 to 10000000

return { "foobar" : $i}

%%jsoniq -pdf

declare type local:mytype as {

"product" : "string",

"store-number" : "int",

"quantity" : "decimal"

};

validate type local:mytype* {

for $product in json-lines("http://rumbledb.org/samples/products-small.json", 10)

where $product.quantity ge 995

return $product

}

%%jsoniq -t

for $i in 1 to 10000000

return { "foobar" : $i}

conf.setPrintIteratorTree(True)

rumble = (RumbleSession.builder
    .config("spark.driver.memory", "10g")
    .getOrCreate())

Update expressions can also appear outside of a copy-modify-return expression, in which case they propagate and/or persist directly to their targets, to the extent that the context makes it meaningful and possible.

copy $obj := { "foo" : "bar", "bar" : [ 1,2,3 ] }

modify (

insert json { "bar" : 123, "foobar" : [ true, false ] } into $obj,

delete json $obj.bar,

replace value of json $obj.foo with true

)

return $obj

This section assumes that you have installed RumbleDB with one of the proposed ways, and guides you through your first queries.

Create, in the same directory as RumbleDB to keep it simple, a file data.json and put the following content inside. This is a small list of JSON objects in the JSON Lines format.

If you want to later try a bigger version of this data, you can also download a larger version with 100,000 objects from here. Wait, no, in fact you do not even need to download it: you can simply replace the file path in the queries below with "https://rumbledb.org/samples/products-small.json" and it will just work! RumbleDB feels just at home on the Web.

RumbleDB also scales without any problems to datasets that have millions or (on a cluster) billions of objects, although of course, for billions of objects HDFS or S3 are a better idea than the Web to store your data, for obvious reasons.

In the JSON Lines format that this simple dataset uses, you just need to make sure you have one object on each line (this is different from a plain JSON file, which has a single JSON value and can be indented). Of course, RumbleDB can read plain JSON files, too (with json-doc()), but below we will show you how to read JSON Line files, which is how JSON data scales.
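The difference between JSON Lines and a plain JSON file can be shown with Python's standard json module. The records below are hypothetical sample data (only the field names are borrowed from the product examples in this documentation), and the two helper functions are illustrative names, not part of RumbleDB.

```python
import json

# Hypothetical sample records, for illustration only.
records = [
    {"product": "blender", "store-number": 1, "quantity": 10},
    {"product": "toaster", "store-number": 2, "quantity": 5},
    {"product": "socks", "store-number": 1, "quantity": 100},
]


def to_json_lines(objects):
    """Serialize each object on its own line, without indentation."""
    return "".join(json.dumps(obj) + "\n" for obj in objects)


def from_json_lines(text):
    """Parse one JSON value per non-empty line."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


text = to_json_lines(records)
print(text, end="")

# Each line parses independently of the others, so a reader can hand
# different ranges of lines to different workers.
parsed = from_json_lines(text)
```

Because every line is a complete JSON value, a large file can be split at line boundaries, which is what makes the format suitable for parallel reading.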

Depending on your installation method, the JSONiq queries will go to:

A cell in a jupyter notebook and with the %%jsoniq magic: a simple click is sufficient to execute.

The shell: type the query, and finish by pressing Enter twice.

In a Python program, inside a rumble.jsoniq() call of which you can exploit the output with more Python code.

A JSONiq query file, which you can execute with the RumbleDB CLI interface.

Either way, the meaning of the queries is the same.

or

or

The above queries do not actually use Spark. Spark is used when the I/O workload can be parallelized. The following query should output the file created above.

json-lines() reads its input in parallel, and thus will also work on your machine with MB or GB files (for TB files, a cluster will be preferable). You should specify a minimum number of partitions, here 10 (note that this is a bit ridiculous for our tiny example, but it is very relevant for larger files), as locally no parallelization will happen if you do not specify this number.

The above creates a very simple Spark job and executes it. More complex queries will create several Spark jobs. But you will not see anything of it: this is all done behind the scenes. If you are curious, you can go to in your browser while your query is running (it will not be available once the job is complete) and look at what is going on behind the scenes.

Data can be filtered with the where clause. Again, under the hood, a Spark transformation will be used:

RumbleDB also supports grouping and aggregation, like so:

RumbleDB also supports ordering. Note that clauses (where, let, group by, order by) can appear in any order. The only constraint is that the first clause should be a for or a let clause.

Finally, RumbleDB can also parallelize data provided within the query, exactly like Spark's parallelize():

Mind the double parenthesis, as parallelize is a unary function to which we pass a sequence of objects.

The docker installation is kindly contributed by Dr. Ingo Müller (Google).

On occasion, the docker version of RumbleDB used to throw a Kryo NoSuchMethodError on some systems. This should be fixed with version 2.0.0; please let us know if this is not the case.

You can upgrade to the newest version with

Docker is the easiest way to get a standard environment that just works.

You can download Docker from .

Then, in a shell, type, all on one line:

The first time, it might take some time to download everything, but this is all done automatically. Subsequent commands will run immediately.

When there are new RumbleDB versions, you can upgrade with:

The RumbleDB shell appears:

You can now start typing simple queries like the following few examples. Press the return key three times to execute a query.

You can also run the docker as a server like so:

You can change the port to something other than 8001 in all three places where it appears. Do not forget -p 8001:8001, which forwards the port to the outside of the docker container. Then, you can use a Jupyter notebook connected to the RumbleDB docker server to write queries in it. Point the notebook to http://localhost:8001/jsoniq in the appropriate cell (or any other port).
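Besides a notebook, any HTTP client can talk to the server. The following sketch uses only Python's standard library and mirrors the curl call shown in this documentation (a POST of the query text to the /jsoniq endpoint). The helper names are hypothetical, and the assumption that the response is a JSON object with a "values" array is based solely on the sample response shown in this documentation. The request is only built here, not sent, so the example runs without a server.

```python
import json
import urllib.request


def build_query_request(query, endpoint="http://localhost:8001/jsoniq"):
    """Build a POST request that carries the query text as its body."""
    return urllib.request.Request(endpoint, data=query.encode("utf-8"), method="POST")


def parse_values(body):
    """Extract the result items, assuming a {"values": [...]} response."""
    return json.loads(body)["values"]


request = build_query_request("1+1")
print(request.get_method(), request.full_url)

# With a server running, the request could be sent like this:
#   body = urllib.request.urlopen(request).read().decode("utf-8")
#   print(parse_values(body))
sample_body = '{ "values" : [ "foo", "bar" ] }'
print(parse_values(sample_body))
```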

In order to query your local files, you need to mount a local directory to a directory within the docker. This is done with the --mount option, and the source path must be absolute. For the target, you can pick anything that makes sense to you.

For example, imagine you have a file products-small.json in the directory /path/to/my/directory. Then you need to run RumbleDB with:

Then you can go ahead and use absolute paths in the target directory in input functions, like so:

You can also mount a local directory in this way when running it as a server rather than a shell.

After you have tried RumbleDB locally as explained in the getting started section, you can take RumbleDB to a real cluster simply by modifying the command line parameters as documented here for spark-submit.

Creating a cluster is the easiest part, as most cloud providers today offer that with just a few clicks: Amazon EMR, Azure HDInsight, etc. You can start with 4-5 machines with a few CPUs each and a bit of memory, and increase later when you want to get serious on larger scales.

Make sure to select a cluster that has Apache Spark. On Amazon EMR, this is not the default and you need to make sure that you check the box that has Spark below the cluster version dropdown. We recommend taking the latest EMR version 6.5.0 and then picking Spark 3.1 in the software configuration. You will also need to create a public/private key pair if you do not already have one.

Wait for 5 or 6 minutes, and the cluster is ready.

Do not forget to terminate the cluster when you are done!

Next, you need to use ssh to connect to the master node of your cluster as the hadoop user and specifying your private key file. You will find the hostname of the machine on the EMR cluster page. The command looks like:

ssh -i ~/.ssh/yourkey.pem hadoop@<ec2-hostname>

If ssh hangs, then you may need to authorize your IP for incoming connections in the security group of your cluster.

And once you have connected with ssh and are on the shell, you can start using RumbleDB in a way similar to what you do on your laptop.

First you need to download it with wget (which is usually available by default on cloud virtual machines):

This is all you need to do, since Apache Spark is already installed. If spark-submit does not work, you might want to wait for a few more minutes as it might be that the cluster is not fully prepared yet.

Often, the Spark cluster is running on yarn. The --master option can be changed from local[*] (which was for running on your laptop) to yarn compared to the getting started guide.

Most of the time, though (e.g., on Amazon EMR), it need not be specified, as this is already set up in the environment. So the same command will do:

When you are on a cluster, you can also adapt the number of executors, how many cores you want per executor, etc. It is recommended to use sqrt(n) cores per executor if a node has n cores. For the executor memory, it is just primary school math: you need to divide the memory on a machine by the number of executors per machine (which is also roughly sqrt(n)).

For example, if we have 6 worker nodes with 16 cores and 64 GB each, we can use 5 executors on each machine, with 3 cores and 10 GB per executor. This leaves a core and a bit of memory free for other cluster tasks.
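The arithmetic of this example can be checked with a few lines of Python. The function names are hypothetical; the three flags (`--num-executors`, `--executor-cores`, `--executor-memory`) are standard spark-submit options, but whether you need to pass them explicitly depends on how your cluster is configured, so treat this as a sketch.

```python
def executor_flags(nodes, executors_per_node, cores_per_executor, memory_gb_per_executor):
    """Build spark-submit flags for a uniform executor layout."""
    return [
        "--num-executors", str(nodes * executors_per_node),
        "--executor-cores", str(cores_per_executor),
        "--executor-memory", f"{memory_gb_per_executor}g",
    ]


def leftover_per_node(cores, memory_gb, executors_per_node,
                      cores_per_executor, memory_gb_per_executor):
    """Return the (cores, GB) left on each node for other cluster tasks."""
    return (
        cores - executors_per_node * cores_per_executor,
        memory_gb - executors_per_node * memory_gb_per_executor,
    )


# The example from the text: 6 nodes, 16 cores and 64 GB each,
# 5 executors per node with 3 cores and 10 GB per executor.
flags = executor_flags(6, 5, 3, 10)
spare = leftover_per_node(16, 64, 5, 3, 10)
print(" ".join(flags))
print(spare)
```

With these numbers, each node keeps 1 core and 14 GB free, and the cluster runs 30 executors in total.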

If necessary, the size limit for materialization can be made higher with --materialization-cap or its shortcut -c (the default is 200). This affects the number of items displayed on the shell as an answer to a query. It also affects the maximum number of items that can be materialized from a large sequence into, say, an array. Warnings are issued if the cap is reached.

json-lines() then takes an HDFS path; the host and port are optional if Spark is configured properly. A second parameter controls the minimum number of splits. By default, each HDFS block is a split if executed on a cluster. In a local execution, there is only one split by default.

The same goes for parallelize(). It is also possible to read text with text-file(), parquet files with parquet-file(), and it is also possible to read data on S3 rather than HDFS for all three functions json-lines(), text-file() and parquet-file().

If you need a bigger data set out of the box, we recommend the , which has 16 million objects. On Amazon EMR, we could even read several billion objects on fewer than ten machines.

We tested this with each new release, and suggest the following queries to start with (we assume HDFS is the default file system, and that you copied over this dataset to HDFS with hadoop fs -copyFromLocal):

Note that by default only the first 200 items in the output will be displayed on the shell, but you can change it with the --materialization-cap parameter on the CLI.

RumbleDB also supports executing a single query from the command line, reading from HDFS and outputting the results to HDFS, with the query file being either local or on HDFS. For this, use the --query-path (optional as any text without parameter is recognized as a path in any case), --output-path (shortcut -o) and --log-path parameters.

The query path, output path and log path can be any of the supported schemes (HDFS, file, S3, WASB...) and can be relative or absolute.

As in most languages, one can distinguish between physical equality and logical equality.

Atomics can only be compared logically. Their physical identity is totally opaque to you.

Result (run with Zorba):true

Result (run with Zorba):false

Result (run with Zorba):false

Result (run with Zorba):true

Two objects or arrays can be tested for logical equality as well, using deep-equal(), which performs a recursive comparison.

Result (run with Zorba):true

Result (run with Zorba):false
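To make the idea of a recursive comparison concrete, here is a hedged Python analogue working on dicts, lists and atomic values. It is not the definition of deep-equal(): the function name is illustrative, and details such as ignoring key order in objects and keeping booleans apart from numbers are assumptions made for this sketch.

```python
def deep_equal(a, b):
    """Recursively compare two JSON-like Python values."""
    if isinstance(a, dict) and isinstance(b, dict):
        # Same keys (in any order) and pairwise equal values.
        return a.keys() == b.keys() and all(deep_equal(a[k], b[k]) for k in a)
    if isinstance(a, list) and isinstance(b, list):
        # Same length and pairwise equal members, in order.
        return len(a) == len(b) and all(deep_equal(x, y) for x, y in zip(a, b))
    if isinstance(a, (dict, list)) or isinstance(b, (dict, list)):
        return False
    # Keep booleans apart from numbers (in Python, True == 1).
    if isinstance(a, bool) or isinstance(b, bool):
        return isinstance(a, bool) and isinstance(b, bool) and a == b
    if a is None or b is None:
        return a is None and b is None
    return a == b


print(deep_equal({"foo": [1, {"bar": True}]}, {"foo": [1, {"bar": True}]}))
print(deep_equal({"foo": [1, 2]}, {"foo": [2, 1]}))
```

The first call prints True because the two structures match at every level; the second prints False because array members are compared position by position.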

The physical identity of objects and arrays is not exposed to the user in the core JSONiq language itself. Some library modules might be able to reveal it, though.

Module

You can group functions and variables in separate library modules.

MainModule

Up to now, everything we encountered were main modules, i.e., a prolog followed by a main query.

LibraryModule

A library module does not contain any query - just functions and variables that can be imported by other modules.

A library module must be assigned to a namespace. For convenience, this namespace is bound to an alias in the module declaration. All variables and functions in a library module must be prefixed with this alias.

ModuleImport

Here is a main module which imports the former library module. An alias is given to the module namespace (my). Variables and functions from that module can be accessed by prefixing their names with this alias. The alias may be different than the internal alias defined in the imported module.

Result (run with Zorba):1764

JSONiq is a query language that was specifically designed for querying JSON, although its data model is powerful enough to handle other similar formats.

As stated on json.org, JSON is a "lightweight data-interchange format. It is easy for humans to read and write. It is easy for machines to parse and generate."

A JSON document is made of the following building blocks: objects, arrays, strings, numbers, booleans and nulls.

JSONiq manipulates sequences of these building blocks, which are called items. Hence, a JSONiq value is a sequence of items.

Any JSONiq expression takes and returns sequences of items.

Comma-separated JSON-like building blocks are all you need to begin building your own sequences. You can mix and match, as JSONiq supports heterogeneous sequences seamlessly.

By default, the memory allocated is limited. This depends on whether you run RumbleDB with the standalone jar or as the thin jar in a Spark environment.

If you run RumbleDB with a standalone jar, then by default Java allocates one quarter of your machine's total memory. You can check this with:

In order to increase the memory, you can use -Xmx10g (for 10 GB, but you can use any other value):

If you run RumbleDB on your laptop (or a single machine) with the thin jar, then the memory is limited to around 2 GB by default, and you can change this with --driver-memory.

Even though you can build your own JSON values with JSONiq by copying-and-pasting JSON documents, most of the time, your JSON data will come from an external input dataset.

How this dataset is accessed depends on the JSONiq implementation and on the context. Some engines can read the data from a file located on a file system, local or distributed (HDFS, S3); some others get data from the Web; some others are full-fledged datastores and have collections that can be created, queried, modified and persisted.

It is up to each engine to document which functions should be used, and how, in order to read datasets into a JSONiq Data Model instance. These functions will take implementation-defined parameters and typically return sequences of objects, or sequences of strings, or sequences of items, etc.

For the purpose of examples given in this specification, we assume that a hypothetical engine has collections that are sequences of objects, identified by a name which is a string. We assume that there is a collection() function that returns all objects associated with the provided collection name.

We assume in particular that there are three example collections, shown below.

{ "product" : "broiler", "store number" : 1, "quantity" : 20 }

{ "product" : "toaster", "store number" : 2, "quantity" : 100 }

{ "product" : "toaster", "store number" : 2, "quantity" : 50 }

{ "product" : "toaster", "store number" : 3, "quantity" : 50 }

{ "product" : "blender", "store number" : 3, "quantity" : 100 }

{ "product" : "blender", "store number" : 3, "quantity" : 150 }

{ "product" : "socks", "store number" : 1, "quantity" : 500 }

{ "product" : "socks", "store number" : 2, "quantity" : 10 }

{ "product" : "shirt", "store number" : 3, "quantity" : 10 }

docker pull rumbledb/rumble

1 eq 1

Result: foo 2 true foo bar null [ 1, 2, 3 ]

Sequences are flat and cannot be nested. This makes streaming possible, which is very powerful.

Result: foo 2 true 4 null 6

A sequence can be empty. The empty sequence can be constructed with empty parentheses.

Result:

A sequence of just one item is considered the same as just this item. Whenever we say that an expression returns or takes one item, we really mean that it takes a singleton sequence of one item.

Result: foo
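The flatness of sequences can be modelled in a few lines of Python, offered here only as an analogy. In this sketch, a tuple stands in for a sequence and a list for a JSON array; `seq` is a hypothetical helper that splices nested sequences into their parent while leaving arrays intact as single items. The model cannot express that a single item and its singleton sequence are the same value, so the third line only shows that wrapping a singleton in another sequence changes nothing.

```python
def seq(*parts):
    """Build a flat sequence: nested sequences (tuples) are spliced in."""
    items = []
    for part in parts:
        if isinstance(part, tuple):
            items.extend(seq(*part))  # a nested sequence contributes its items
        else:
            items.append(part)        # arrays (lists) remain single items
    return tuple(items)


print(seq(("foo", 2), True, (4, None, 6)))  # ('foo', 2, True, 4, None, 6)
print(seq())                                # ()
print(seq("foo") == seq(("foo",)))          # True
print(len(seq("foo", [1, 2, 3])))           # 2
```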

JSONiq classifies the items mentioned above in three categories:

Atomic items: strings, numbers, booleans and nulls, but also many other supported atomic values such as dates, binary, etc.

Structured items: objects and arrays.

Function items: items that can take parameters and, upon evaluation, return sequences of items.

The JSONiq data model follows the W3C specification, but, in core JSONiq, does not include XML nodes, and includes instead JSON objects and arrays. Engines are free, however, to optionally support XML nodes in addition to JSON objects and arrays.

An atomic is a non-structured value that is annotated with a type.

JSONiq atomic values follow the W3C specification.

JSONiq supports most atomic values available in the W3C specification. They are described in Chapter The JSONiq type system. JSONiq furthermore defines an additional atomic value, null, with a type of its own, jn:null, which does not exist in the W3C specification.

In particular, JSONiq supports all core JSON values. Note that JSON numbers correspond to three different types in JSONiq.

string: all JSON strings.

integer: all JSON numbers that are integers (no dot, no exponent), infinite range.

decimal: all JSON numbers that are decimals (no exponent), infinite range.

double: IEEE double-precision 64-bit floating point numbers (corresponds to JSON numbers with an exponent).

boolean: the JSON booleans true and false.

null: the JSON null.
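The mapping of JSON numbers to the three numeric types depends only on the lexical form of the number, which can be sketched as follows. The function name `jsoniq_number_type` is hypothetical, and the regular expression follows the JSON number grammar.

```python
import re

# Lexical form of a JSON number: optional sign, integer part,
# optional fraction, optional exponent.
_JSON_NUMBER = re.compile(r"-?(?:0|[1-9][0-9]*)(\.[0-9]+)?([eE][+-]?[0-9]+)?")


def jsoniq_number_type(literal):
    """Return the JSONiq type name for a JSON number literal."""
    match = _JSON_NUMBER.fullmatch(literal)
    if match is None:
        raise ValueError(f"not a JSON number: {literal!r}")
    fraction, exponent = match.groups()
    if exponent is not None:
        return "double"   # any exponent makes it a double
    if fraction is not None:
        return "decimal"  # a dot without exponent makes it a decimal
    return "integer"      # no dot, no exponent


for literal in ["42", "3.14", "1e3", "-0.5E-2"]:
    print(literal, jsoniq_number_type(literal))
```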

Structured items in JSONiq do not follow the XQuery 3.1 standard but are specific to JSONiq.

In JSONiq, an object represents a JSON object, i.e., a collection of string/item pairs.

Objects have the following property:

pairs. A set of pairs. Each pair consists of an atomic value of type xs:string and of an item.

[ Consistency constraint: no two pairs have the same name (using fn:codepoint-equal). ]

The XQuery data model uses accessors to explain the data model. Accessors are not exposed to the user and are only used for convenience in this specification. Objects have the following accessors:

jdm:object-keys($o as js:object) as xs:string*: returns all keys in the object $o.

jdm:object-value($o as js:object, $s as xs:string) as js:item: returns the value associated with $s in the object $o.

An object does not have a typed value.

In JSONiq, an array represents a JSON array, i.e., an ordered list of items.

Arrays have the following property:

members. An ordered list of items.

Arrays have the following accessors:

jdm:array-size($a as js:array) as xs:nonNegativeInteger: returns the number of values in the array $a.

jdm:array-value($a as js:array, $i as xs:positiveInteger) as js:item: returns the value at position $i in the array $a.

An array does not have a typed value.

Unlike in the XQuery 3.1 standard, the values in arrays and objects are single items (which disallows the empty sequence or a sequence of more than one item). Also, object keys must be strings (which disallows any other atomic value).
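To make the accessors more concrete, here is a small Python model in which a dict stands in for an object and a list for an array. The function names mirror the accessors above but are otherwise hypothetical, since the accessors are not exposed to users. The sketch assumes that array positions start at 1, which is what the xs:positiveInteger parameter type suggests.

```python
def object_keys(obj):
    """Model of jdm:object-keys: all keys of the object."""
    return list(obj.keys())


def object_value(obj, key):
    """Model of jdm:object-value: the single item stored under key."""
    return obj[key]


def array_size(arr):
    """Model of jdm:array-size: the number of members."""
    return len(arr)


def array_value(arr, position):
    """Model of jdm:array-value: the member at a 1-based position."""
    if position < 1 or position > len(arr):
        raise IndexError("position out of range")
    return arr[position - 1]


order = {"product": "toaster", "store number": 2, "quantity": 100}
print(object_keys(order))               # ['product', 'store number', 'quantity']
print(object_value(order, "quantity"))  # 100
print(array_size([10, 20, 30]))         # 3
print(array_value([10, 20, 30], 1))     # 10
```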

JSONiq also supports function items, also known as higher-order functions. A function item can be passed parameters and evaluated.

A function item has an optional name and an arity. It also has a signature, which consists of the sequence type of each one of its parameters (as many as its arity), and the sequence type of the values it returns.

The fact that functions are items means that they can be returned by expressions, and passed as parameters to other functions. This is why they are also often called higher-order functions.
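The same idea exists in most languages with first-class functions. The following Python sketch is an analogy rather than JSONiq itself: `arity` and `twice` are hypothetical helpers showing that a function value has an arity, can be passed as a parameter, and can be returned as a result.

```python
import inspect


def arity(function):
    """Number of parameters the function item takes."""
    return len(inspect.signature(function).parameters)


def twice(function):
    """Take a function item and return a new function item."""
    return lambda value: function(function(value))


increment = lambda value: value + 1  # an anonymous function item
add_two = twice(increment)           # a function passed in, another returned

print(arity(increment))  # 1
print(add_two(40))       # 42
```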

JSONiq function items follow the W3C specification.

If you run RumbleDB on a cluster, then the memory needs to be allocated to the executors, not the driver:

Setting things up on a cluster requires more thinking because setting the executor memory should be done in conjunction with setting the total number of executors and the number of cores per executor. This highly depends on your cluster hardware.
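One way to reason about these settings together is to compute what a given configuration asks of the cluster as a whole. The sketch below is only illustrative: `executor_plan` is a hypothetical helper, the numbers are arbitrary, and the option names are the standard spark-submit ones (`--num-executors` applies to resource managers such as YARN or Kubernetes).

```python
def executor_plan(num_executors, cores_per_executor, memory_gb_per_executor):
    """Summarize what a given executor configuration asks of the cluster."""
    return {
        "options": [
            "--num-executors", str(num_executors),
            "--executor-cores", str(cores_per_executor),
            "--executor-memory", f"{memory_gb_per_executor}g",
        ],
        "total_cores": num_executors * cores_per_executor,
        "total_memory_gb": num_executors * memory_gb_per_executor,
    }


# Arbitrary example values; the right ones depend on your hardware.
plan = executor_plan(num_executors=4, cores_per_executor=5, memory_gb_per_executor=10)
print(" ".join(plan["options"]))
print(plan["total_cores"], "cores and", plan["total_memory_gb"], "GB requested in total")
```

Comparing the totals against what the cluster actually offers is a quick sanity check before submitting a job.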

RumbleDB does not currently support paths containing whitespace. Make sure to put your data and modules at paths without whitespace.

If this happens, you can download winutils.exe to solve the issue as explained here.

This is a known issue under investigation. It is related to a version conflict between Kryo 4 and Kryo 5 that occasionally happens on some docker installations. We recommend trying a local installation instead, as described in the Getting Started section.

A very common cause of errors is using the wrong Java version. With Spark 3.5, only Java 11 and 17 are supported. With Spark 4, Java 17 and 21 are supported.

You should make sure in particular that you are not using a more recent Java version. Multiple Java versions can normally coexist on the same machine, but you need to make sure to set the JAVA_HOME variable appropriately.

It is easy to check the Java version with:

java -version
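If the check needs to be scripted, the version string reported by Java can be parsed and compared against the supported versions listed above. This is a sketch under assumptions: the helper names and the `SUPPORTED_JAVA` table are hypothetical, and the sample strings only imitate typical `java -version` output (which Java prints to standard error).

```python
import re

# Java versions listed above as supported, keyed by Spark version.
SUPPORTED_JAVA = {"3.5": {11, 17}, "4": {17, 21}}


def java_major_version(version_output):
    """Extract the major Java version from `java -version` output."""
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if match is None:
        raise ValueError("no Java version string found")
    major, minor = match.group(1), match.group(2)
    # Legacy numbering scheme: "1.8.0_392" denotes Java 8.
    if major == "1" and minor is not None:
        return int(minor)
    return int(major)


def is_supported(version_output, spark_version):
    """Check the reported Java version against the table above."""
    return java_major_version(version_output) in SUPPORTED_JAVA[spark_version]


sample = 'openjdk version "17.0.9" 2023-10-17'
print(java_major_version(sample))   # 17
print(is_supported(sample, "3.5"))  # True
```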

Sometimes, a sequence can be very long, and materializing it to a tuple of JSON values or a tuple of native items can fail because of the materialization cap. While it can be changed in the configuration to allow for larger tuples, this does not scale.

Another way to retrieve a sequence of arbitrary length is to use the iterator API to stream through the items one by one. If you do not keep previous values in memory, there is no limit to the sequence size that can be retrieved in this way (but it may take more time than using RDDs or DataFrames, which benefit from parallelism).
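The trade-off between materializing and streaming can be illustrated in plain Python, independently of the RumbleDB API. Everything in this sketch is hypothetical: `generate_items` stands in for a query result of arbitrary length, `materialize` imitates a materialization cap (the value 200 is arbitrary), and `stream_total` consumes items one by one while keeping only a running total in memory.

```python
from itertools import islice


def generate_items(count):
    """Hypothetical stand-in for a query result of arbitrary length."""
    for index in range(count):
        yield {"id": index, "quantity": index % 10}


def materialize(items, cap):
    """Collect items into a tuple, failing if there are more than cap."""
    collected = tuple(islice(items, cap + 1))
    if len(collected) > cap:
        raise OverflowError(f"sequence exceeds the materialization cap of {cap}")
    return collected


def stream_total(items):
    """Consume items one by one, keeping only a running total in memory."""
    total = 0
    for item in items:
        total += item["quantity"]
    return total


print(len(materialize(generate_items(3), cap=200)))  # 3
print(stream_total(generate_items(100_000)))         # 450000
```

Calling `materialize(generate_items(100_000), cap=200)` would raise an error, whereas the streaming version handles the same input without holding more than one item at a time.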

This is how to stream through the items converted to JSON one by one:

This is how to stream through the native items, using the Item API:

Sometimes, it is not possible to retrieve the output sequence as a (pandas or pyspark) DataFrame because no schema could be inferred. This is notably the case if the output sequence is heterogeneous (such as a sequence of items mixing atomics, objects of various structures, arrays, etc).

And materializing or streaming may not be an option either if there are billions of items.

In this case, it is possible to obtain the output as an RDD instead. This gets an RDD of JSON values that can be processed by Python (using the type mapping).

The rdd() method is experimental because we had to reverse-engineer how pyspark encodes RDDs for the Java Virtual Machine (pickling).

# Retrieve the materialized items and print each one as a string.
items = res.items()
for result in items:
    print(result.getStringValue())

Result

Result

Result

collection("one-object")

{ "foo" : "bar" }

"Hello, World"

1 + 1

(3 * 4) div 5

json-lines("data.json")

for $i in json-lines("data.json", 10)
return $i

for $i in json-lines("data.json", 10)
where $i.quantity gt 99
return $i

for $i in json-lines("data.json", 10)
let $quantity := $i.quantity
group by $product := $i.product
return { "product" : $product, "total-quantity" : sum($quantity) }

for $i in json-lines("data.json", 10)
let $quantity := $i.quantity
group by $product := $i.product
let $sum := sum($quantity)
order by $sum descending
return { "product" : $product, "total-quantity" : $sum }

for $i in parallelize((
  { "product" : "broiler", "store number" : 1, "quantity" : 20 },
  { "product" : "toaster", "store number" : 2, "quantity" : 100 },
  { "product" : "toaster", "store number" : 2, "quantity" : 50 },
  { "product" : "toaster", "store number" : 3, "quantity" : 50 },
  { "product" : "blender", "store number" : 3, "quantity" : 100 },
  { "product" : "blender", "store number" : 3, "quantity" : 150 },
  { "product" : "socks", "store number" : 1, "quantity" : 500 },
  { "product" : "socks", "store number" : 2, "quantity" : 10 },
  { "product" : "shirt", "store number" : 3, "quantity" : 10 }
), 10)
let $quantity := $i.quantity
group by $product := $i.product
let $sum := sum($quantity)
order by $sum descending
return { "product" : $product, "total-quantity" : $sum }

docker run -i rumbledb/rumble repl

docker pull rumbledb/rumble

    ____                  __    __     ____  ____
   / __ \__  ______ ___  / /_  / /__  / __ \/ __ )
  / /_/ / / / / __ `__ \/ __ \/ / _ \/ / / / __  |  The distributed JSONiq engine
 / _, _/ /_/ / / / / / / /_/ / /  __/ /_/ / /_/ /   2.0.0 "Lemon Ironwood" beta
/_/ |_|\__,_/_/ /_/ /_/_.___/_/\___/_____/_____/

App name: spark-rumble-jar-with-dependencies.jar

Master: local[*]

Driver's memory: (not set)

Number of executors (only applies if running on a cluster): (not set)

Cores per executor (only applies if running on a cluster): (not set)

Memory per executor (only applies if running on a cluster): (not set)

Dynamic allocation: (not set)

Item Display Limit: 200

Output Path: -

Log Path: -

Query Path : -